from Cinegraphic.net:

On Synchronization in Movies

story © internetted, December 27, 2016. All rights reserved.

URL: https://www.cinegraphic.net/article.php?story=20161227085641628

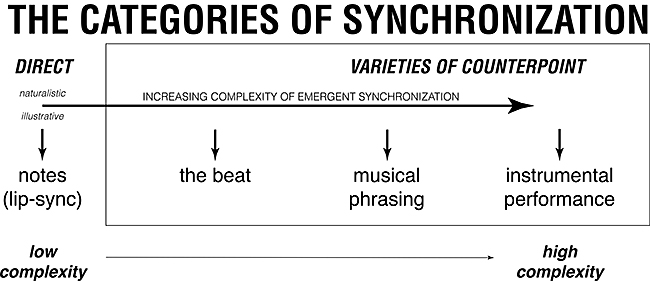

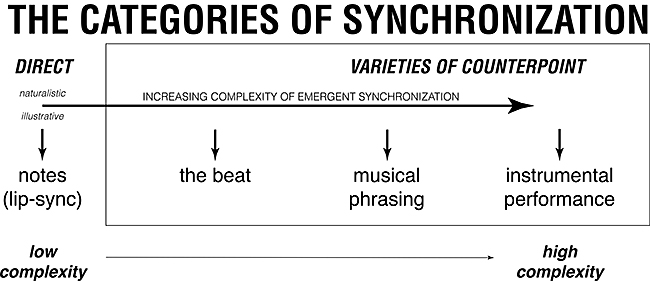

Interpreting motion pictures depends on the syntactic organization that synchronization provides: audio-visual statements allow the audience to parse a movie into distinct sections, a parsing governed by past experience. All the various methodologies and aesthetic approaches of visual music fit within a spectrum where the link of sound::image increases in complexity as it moves away from direct synchronization. Two variables define this audio-visual syntax: what provides the sync-point, and when that sync-point occurs in relation to earlier and later sync-points in both the sound and image tracks. Direct and counterpoint synchronization are distinguished by the proximity or distance between audible sync-points. The visual sync-points (motion on-screen, the duration of the shot/music, chiaroscuro dynamics within the frame, or a combination of all three) are complemented by a second set of audible sync-points, the most basic being the appearance of a sound (as in the ‘lip-sync’ of direct synchronization). The others, used in counterpoint synchronization, all emerge over time rather than forming an immediately apparent connection: the beat, musical phrasing, or instrumental performance. These audible sync-points connect with the same set of visual sync-points, enabling the audience to identify the emergent statement of counterpoint as part of a continuum of synchronized links of sound::image that orients the soundtrack and the visuals.

This framework demonstrates the entangled and overlapping connections between the varieties of direct synchronization and the higher-level interpretations emerging as counterpoint synchronization. The shift away from direct synchronization (with its resemblance to lip-sync) and towards less immediate connections has antecedents throughout the collection of films and videos called “visual music.” The assignment of particular animated forms to particular instruments in Len Lye’s 1935 film A Colour Box is a statement antithetical to, but as recognizable as, any direct synchronization. Anything that appears on-screen potentially offers a sync-point: variable motion of elements (or within them), the appearance/disappearance of forms, and the ‘cut’ where the entire image changes. The motion of elements includes any motion, even motion in place that involves no movement across the frame (rotation, pulsation, vibration, color changes, as well as animorphs). What matters about these transformations is that the audience recognizes them as changes; provided the audience recognizes the change, that element can serve as a sync-point.

What is typically called “visual music” is only synchronization at the lowest level of audio-visual structuring (illustrative synchronization). The sync-points used for this proximate connection between individual notes and accompanying visual elements within the image allow for a range of associations between a sound and a form (motion, chiaroscuro or color, appearance/disappearance). The distinction between direct and counterpoint synchronization depends entirely on when the sync-point appears: proximity and distance differentiate between them. Counterpoint’s reliance on connections that emerge through development over time renders its formal character fundamentally different from direct synchronization. The immediacy of a form synchronized to a heard note creates the illustrative linkages common to visual music films, but this directness of connection is only part of the range described in the diagram; as the distance (in time) between sync-points increases, synchronization shifts from direct to counterpoint. The appearance of direct synchronization and counterpoint within the same work is not unusual. The distance between a note as sync-point and the beat, measure, or melodic phrase is simply a matter of degree rather than an absolute distinction; only the apperception of synchronization creates the illusion of their radical difference.

Synchronization renders the audible subservient to the visual, reflecting the hierarchy of language-vision-hearing described in Michel Foucault’s discussion of the gaze as an ordering approach to the world. Because motion pictures have a phenomenal basis that employs the same senses we use to understand the world, our interpretations of motion pictures inevitably employ these same frameworks to understand their constructed artifice. This experiential overlap allows the audience to glean, in and from their encounter with the film itself, the basic knowledge required for identifying synchronization (the structural link created by identifying sound and image as forming a statement), giving all associations of sound::image an immediacy that hides their arbitrary nature. The statement created by perceived synchronization occupies a position of great importance for interpretation, since the indivisibility of the interpretation from its subject shows that interpretation moves from ‘lower’ to ‘higher’ levels. These distinctions are important for what they suggest about the typical understanding of perception-as-semiosis, as semiotician Umberto Eco noted in his book Kant and the Platypus:

> if we accept that even perception is a semiosis phenomenon, discriminating between perception and signification gets a little tricky. ... We speak of perceptual semiosis not when something stands for something else but when from something, by an inferential process, we come to pronounce a perceptual judgment on that same something and not on anything else. ... The fact that a perception may be successful precisely because we are guided by the notion that the phenomenon is hypothetically understood as a sign ... does not eliminate the problem of how we perceive it. [pp. 125-126]

Understanding perception-as-semiosis conceptualizes the interpretative process as a series of conscious decisions (often made semi-autonomously) about what in perception has value and needs to be attended to. This action is anterior to linguistic engagement: as semiotician Christian Metz’s theory of “aural objects” demonstrates, attaching a name to a sound is a semiotic procedure dependent on the audience’s “past experience,” one that is parallel to, but independent of, the relationships that comprise the identification of the apparently immanent link that is synchronization. The statement, which Foucault identifies as the fundamental unit of enunciation (i.e., the vehicle for semiosis), develops on these related but independent levels within the title sequence: first as the synchronization of sound::image, emergent in its organization over time; then, at a higher level of interpretation, as the title sequence itself, whose comprehension as a pseudo-independent paratext is enabled by identifying it as a distinct component within the film. This foundational interpretation is an identification that exceeds mere simultaneous sensory encounter: it forms these encounters into statements that then build into the more familiar and elaborate networks of relationship, indexicality, and narrative.

The same already-cultural foundation that defines “music” for Lévi-Strauss applies to the synchronization of sound::image: as a meaningful structure, synchronization is therefore dependent on the recognition/invocation of the same interpretative expertise that establishes meaning for other types of semiosis. When a particular grouping of these statements is identified as the ‘title sequence,’ the centrality of synchronization for the unity and punctuation of many of these designs becomes obvious: the alignments of sound::image::text that compose the title design are not only definitive of its formal character but also instrumental in identifying signification within those arrangements. ‘Cinematic form’ does not depend on isolated signs, but rather emerges from the audience’s conventional interpretations centered on these emergent statements and their development into sequences.

These shifting levels of interpretation are a function of how the statement structures and organizes the otherwise disparate phenomenal encounters with sound and image. Their mere presentational simultaneity is not enough to guarantee that they are comprehended as meaningfully correlated, synchronized statements. This transformation happens at different levels of complexity and immediacy in the title sequence, reflecting the role of audience interpretation through the language-vision-hearing hierarchy as a constraint on the identification and conception of the whole.